Imagine: What if narrow AI fractured our shared reality?

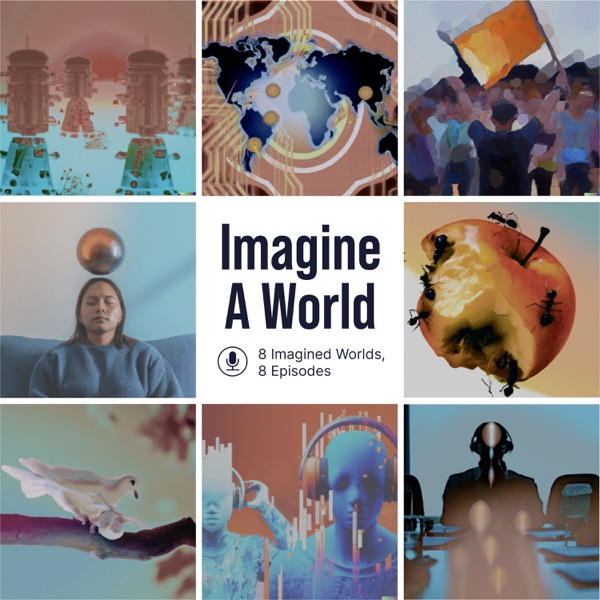

Imagine A World - A podcast by Future of Life Institute

Let's imagine a future where AGI is developed but kept from having much practical impact on the world, while narrow AI remakes the world completely. Inequality persists, and AI fractures society into separate media bubbles with irreconcilable perspectives. But it's not all bad. AI markedly improves the general quality of life, enhancing medicine and therapy, and those bubbles help to sustain their inhabitants. Can you get excited about a world with these tradeoffs?

Imagine A World is a podcast exploring a range of plausible and positive futures with advanced AI, produced by the Future of Life Institute. We interview the creators of 8 diverse and thought-provoking imagined futures that we received as part of the worldbuilding contest FLI ran last year.

In the seventh episode of Imagine A World, we explore a fictional worldbuild titled 'Hall of Mirrors', which was a third-place winner of FLI's worldbuilding contest. Michael Vassar joins Guillaume Reisen to discuss his imagined future, which he created with the help of Matija Franklin and Bryce Hidysmith. Vassar was formerly the president of the Singularity Institute and co-founded Metamed; more recently he has worked on communication across political divisions. Franklin is a PhD student at UCL working on AI ethics and alignment. Finally, Hidysmith began in fashion design and passed through fortune-telling before winding up in finance and policy research, at places like Numerai, the Median Group, Bismarck Analysis, and Eco.com.

Hall of Mirrors is a deeply unstable world where nothing is as it seems. The structures of power that we know today have eroded away, surviving only as shells of expectation and appearance. People are isolated by perceptual bubbles and struggle to agree on what is real. This team put a lot of effort into creating a plausible, empirically grounded world, but their work is also notable for its irreverence and dark humor. In some ways, this world is a caricature of the present.
We see deeper isolation and polarization caused by media, and a proliferation of powerful but ultimately limited AI tools that further erode our sense of objective reality. A deep instability threatens. And yet, on a human level, things seem relatively calm. It turns out that the stories we tell ourselves about the world have a lot of inertia, and so do the ways we live our lives.

Please note: This episode explores the ideas created as part of FLI's worldbuilding contest, and our hope is that this series sparks discussion about the kinds of futures we want. The ideas presented in these imagined worlds and in our podcast are not to be taken as FLI-endorsed positions.

Explore this worldbuild: https://worldbuild.ai/hall-of-mirrors

The podcast is produced by the Future of Life Institute (FLI), a non-profit dedicated to guiding transformative technologies for humanity's benefit and reducing existential risks. You can find out more about our work at www.futureoflife.org, or subscribe to our newsletter to get updates on all our projects.

Media referenced in the episode:

- https://en.wikipedia.org/wiki/Neo-Confucianism
- https://en.wikipedia.org/wiki/Who_Framed_Roger_Rabbit
- https://en.wikipedia.org/wiki/Seigniorage
- https://en.wikipedia.org/wiki/Adam_Smith
- https://en.wikipedia.org/wiki/Hamlet
- https://en.wikipedia.org/wiki/The_Golden_Notebook
- https://en.wikipedia.org/wiki/Star_Trek%3A_The_Next_Generation
- https://en.wikipedia.org/wiki/C-3PO
- https://en.wikipedia.org/wiki/James_Baldwin